Written by Henrik Linusson, Data Scientist

What does it really mean to run machine learning on the edge? Over the last five years, Ekkono’s researchers, engineers, and developers have been working hard to bring smart functionality to small hardware platforms. In this blog post, I would like to give you a small glimpse of what’s possible to achieve with our purpose-built machine learning software library, designed with portability and ease-of-use in mind from the very first line of code.

Edge Machine Learning

At Ekkono, we’re all about pushing smart functionality as far out on the network edge as possible. The potential benefits of analyzing data close to the source, rather than uploading it to the cloud, are many: faster response times, increased security and privacy, and improved energy efficiency, to name a few. Back in June, one of our data scientists, Eva Garcia Martin, wrote about some of the challenges of designing and building machine learning software for edge devices, and how those challenges influence our R&D processes. Here, we will elaborate further on two of the design principles Eva outlined in her post: ease of deployment and, perhaps more excitingly, “Can it run anywhere?”.

The ability to deploy, and run, our software library on constrained hardware platforms hinges on a number of considerations related to our R&D process, from research and algorithm design, to implementation and testing. One such consideration is our purposeful decision not to rely on any third-party code (whether that is open or closed source) in our SDK. Owning every single line of code that goes into our compiled libraries means we’re able to ensure a high degree of compatibility across hardware platforms and compiler toolchains.

Getting silly with it

While ensuring that our software library delivers a high degree of portability is very important to us, it doesn’t necessarily sound particularly exciting, or even impressive, if your world is not centered around working with machine learning algorithms on constrained hardware. So, in order to illustrate (and show off, a little bit) just how portable Ekkono’s machine learning SDK really is, I set myself a challenge: to get our stuff running on the most ubiquitous computer of all time (according to Guinness World Records), the Commodore 64.

However, before I get into the details of running our machine learning algorithms on a C64, I want to offer a bit of context. Our machine learning library suite is (currently) implemented and distributed as three separate-but-integrated subsystems: Edge, Primer, and Crystal. Edge is the core of our software offering, providing our full suite of machine learning algorithms, data pre-processing pipelines, and model evaluation tools. Its primary use is to serve as a machine learning backbone for smart applications running on relatively capable systems with a mature C++ toolchain (e.g., everything from a Raspberry Pi to a cloud-hosted server). Primer is a closely-tied companion to Edge, providing further functionality aimed at engineers and data scientists developing machine learning solutions intended to be deployed using Edge, such as data augmentation and automated machine learning (AutoML) tools. Crystal – which will be our main focus for the remainder of this post – acts as Edge’s smaller sibling. When running Edge on the target platform isn’t feasible, we can simply export our models to the Crystal format, which lets us perform much of the same machine learning magic on much more constrained hardware, requiring only a C toolchain.

Coming back to our Commodore 64 challenge, I will highlight a couple of things regarding the hardware itself, as well as the surrounding software development support. First of all, the C64 runs a MOS Technology 6510 microprocessor (a close variant of the 6502) at roughly 1 MHz, and offers (as its name suggests) 64 kilobytes of RAM, of which approximately 50 kilobytes can be made available to an application not requiring the BASIC interpreter. Second, while era-appropriate C64 applications most likely would have been developed using BASIC or 6502 assembly, modern-day developers have the luxury of being able to compile C code for the Commodore 64’s 6502-family processor using, e.g., the cc65 or vbcc6502 compilers (with little or no support for compiling C++). Given these constraints on hardware (in particular, available memory) and compiler toolchain, getting Ekkono’s machine learning tools fired up on a C64 quite naturally translates into compiling and running our Crystal library on the decades-old computer.
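To put the memory constraint in perspective, here is a small C sketch (not part of the Ekkono SDK) that counts the weights and biases of a fully connected network. The helper names and the 2-8-1 layer sizes in the usage note are hypothetical, and the byte count assumes 4-byte `float`s; activations and training buffers would add some overhead on top.

```c
#include <stddef.h>

/* Hypothetical helper: number of trainable parameters (weights + biases)
   in a fully connected network with the given layer sizes. */
size_t mlp_param_count(const size_t *layers, size_t n_layers)
{
    size_t count = 0;
    for (size_t i = 0; i + 1 < n_layers; ++i) {
        /* weights from layer i to layer i+1, plus one bias per neuron in layer i+1 */
        count += layers[i] * layers[i + 1] + layers[i + 1];
    }
    return count;
}

/* Bytes needed to store those parameters as single-precision floats. */
size_t mlp_param_bytes(const size_t *layers, size_t n_layers)
{
    return mlp_param_count(layers, n_layers) * sizeof(float);
}
```

For a 2-8-1 network, `mlp_param_count` gives 2*8 + 8 + 8*1 + 1 = 33 parameters, or 132 bytes with 4-byte floats, which is tiny compared to the roughly 50 kilobytes available.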

If you are curious about the somewhat unorthodox look of our particular C64, it happens to be a C64G model, released exclusively on the European market with an updated lighter-colored look, as well as a revised motherboard design. In addition, if you want to get a feel for the age of the C64 computer, this particular specimen was manufactured in West Germany – a country that hasn’t existed since 1990.

Compilers, Compilers, Compilers

On a personal note, this seemingly ambitious side project felt not only like a challenge we Ekkonoiseurs collectively posed to ourselves, but also like a personal challenge, as I happily volunteered to make this whole thing happen. As a data scientist, my day-to-day comfort zone usually starts and ends with working with the Ekkono SDK through the Python bindings we offer for the Primer, Edge and Crystal libraries, and my C programming experience is just as limited as it is dated. Similarly, growing up as a “console kid” in the 1990s, I had rarely even touched a Commodore computer before taking up this challenge.

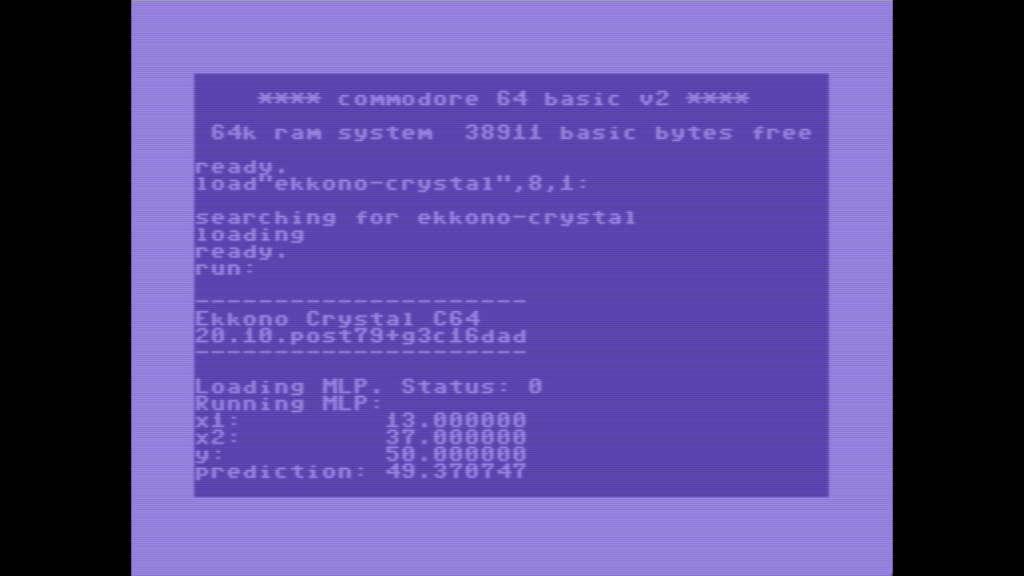

The initial plan of attack was made as simple as possible: train a predictive model (a tiny neural network) to perform addition of two numbers using Primer/Edge, then export the model configuration to the Crystal format so that we can load the model in a C project using the Crystal API. From there, we should be able to make predictions (i.e., add two input numbers together) by running the neural network on a C64, as long as we are able to compile the Crystal library for the hardware. After selecting and configuring a suitable C compiler for the 6502 processor (vbcc6502), getting the simple Ekkono Crystal project to run in the VICE C64 emulator turned out, to my surprise and joy, to be relatively straightforward. Below is a screenshot of this initial simple project running in the emulator: our pre-trained neural network is loaded using the Crystal API, and two random numbers are generated and fed through the network, producing an output that’s very close to the expected ground truth.
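The prediction step itself is just a forward pass through the network. The sketch below is a generic illustration in plain C, not the Crystal API: the weights are hand-picked (hypothetical) rather than trained, chosen so that `relu(s) - relu(-s) = s` makes the output exactly the sum of the two inputs. A network trained with Primer/Edge would instead land very close to the true sum.

```c
#include <stddef.h>

#define N_IN  2
#define N_HID 2

/* Hypothetical hand-picked weights for a 2-2-1 network; NOT exported
   from any Ekkono tool. Hidden neuron 0 sees x0 + x1, neuron 1 sees -(x0 + x1). */
static const float w1[N_HID][N_IN] = { {1.0f, 1.0f}, {-1.0f, -1.0f} };
static const float b1[N_HID] = {0.0f, 0.0f};
static const float w2[N_HID] = {1.0f, -1.0f};
static const float b2 = 0.0f;

static float relu(float x) { return x > 0.0f ? x : 0.0f; }

/* Forward pass: hidden layer with ReLU, linear output neuron. */
float mlp_predict(const float x[N_IN])
{
    float out = b2;
    for (size_t j = 0; j < N_HID; ++j) {
        float h = b1[j];
        for (size_t i = 0; i < N_IN; ++i)
            h += w1[j][i] * x[i];
        out += w2[j] * relu(h);
    }
    return out;
}
```

Calling `mlp_predict` with `{3.0f, 4.0f}` returns `7.0f`, and with `{-2.5f, 1.0f}` it returns `-1.5f`.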

Now, after having made sure that we can indeed compile our library for the Commodore 64, there are of course quite a few improvements left to make: we need to get our demo application running on real hardware and, naturally, we need to work a bit on the looks. Most importantly though, running a pre-trained neural network on the C64 just doesn’t seem exciting enough. What is more exciting than running a pre-trained neural network on the 8-bit machine? Training a neural network on the 8-bit machine! We can just as easily accomplish this using the Crystal library, by (just like before) specifying an incrementally trained neural network using Edge/Primer and then exporting the untrained network to the Crystal format. After loading the network using Crystal on the C64, we can then train the network incrementally, one training example at a time, and see how the neural network’s performance improves in real time.
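Incremental (online) training means updating the parameters after every single example rather than over a whole dataset. As a minimal sketch of that loop, assuming inputs already scaled to [0, 1), the code below trains a single linear neuron with per-example stochastic gradient descent on squared error. It stands in for the MLP to keep the example short, and none of the names reflect Crystal’s API. The small linear congruential generator replaces `rand()` so the sequence of training examples is the same on every platform.

```c
#include <stdint.h>

/* Tiny LCG (Numerical Recipes constants) for a deterministic,
   platform-independent stream of pseudo-random inputs. */
static uint32_t lcg_state = 12345u;

static float rand_unit(void)
{
    lcg_state = lcg_state * 1664525u + 1013904223u;
    return (float)(lcg_state >> 8) / 16777216.0f; /* top 24 bits -> [0, 1) */
}

/* Hypothetical model: y = w[0]*x0 + w[1]*x1 + b */
typedef struct {
    float w[2];
    float b;
    float lr; /* learning rate */
} neuron;

/* One SGD step on the loss 0.5 * (pred - target)^2.
   Returns the prediction made before the update. */
float train_one(neuron *n, const float x[2], float target)
{
    float pred = n->w[0] * x[0] + n->w[1] * x[1] + n->b;
    float err = pred - target; /* derivative of the loss w.r.t. pred */
    n->w[0] -= n->lr * err * x[0];
    n->w[1] -= n->lr * err * x[1];
    n->b    -= n->lr * err;
    return pred;
}

/* Feed `steps` fresh examples (two random numbers and their sum), one at
   a time. Returns the absolute error on the last example seen. */
float train_addition(neuron *n, int steps)
{
    float last_abs_err = 0.0f;
    for (int t = 0; t < steps; ++t) {
        float x[2];
        x[0] = rand_unit();
        x[1] = rand_unit();
        float target = x[0] + x[1];
        float e = train_one(n, x, target) - target;
        last_abs_err = e < 0.0f ? -e : e;
    }
    return last_abs_err;
}
```

Starting from zero weights with a learning rate of 0.1, after a few thousand examples the parameters end up very close to w = (1, 1), b = 0, i.e., exact addition. A real MLP adds hidden layers and backpropagation, but the shape of the loop (predict, compute the error, nudge the parameters, move on to the next example) stays the same.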

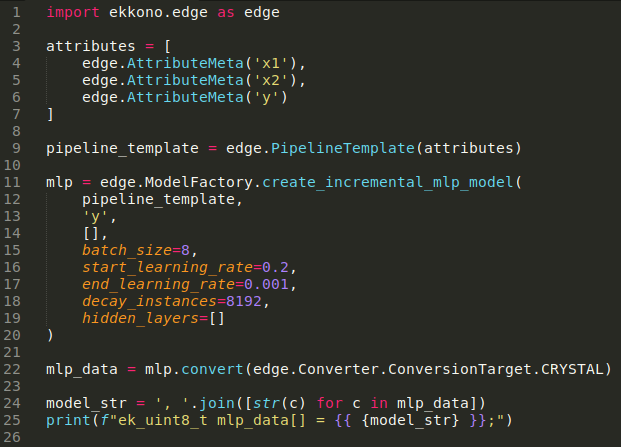

Below you can see the simple Python script in which we define the incremental neural network (MLP for multi-layer perceptron), and export the neural network to the Crystal binary format. The resulting binary data (here just under 100 bytes, simply printed to the terminal) contains a description of the neural network architecture, any integrated pre-processing steps to take when feeding data through the network, as well as specifications for all the relevant training algorithm settings, such as training batch size and learning rate.
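To illustrate why such a description can be this compact, here is a sketch of a hypothetical byte layout for a model specification: layer sizes, an activation id, an input-scaling pre-processing step, the learning rate, and the batch size. This is not the Crystal format, whose layout the post does not describe. Multi-byte values are written byte by byte in little-endian order, so the output is identical whichever machine produces it; the `memcpy` of a `float` assumes the host uses IEEE-754 single precision.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Compile-time check: this sketch assumes 4-byte floats. */
typedef char float_is_32_bits[sizeof(float) == 4 ? 1 : -1];

/* Hypothetical model specification; illustrative only, NOT the Crystal format. */
typedef struct {
    uint8_t  n_layers;      /* number of layers, including input and output */
    uint8_t  layer_size[8]; /* neurons per layer */
    uint8_t  activation;    /* e.g. 0 = linear, 1 = ReLU, 2 = tanh */
    float    input_scale;   /* pre-processing: multiply inputs by this */
    float    learning_rate;
    uint16_t batch_size;
} model_spec;

static size_t put_u16(uint8_t *p, uint16_t v)
{
    p[0] = (uint8_t)(v & 0xFFu); /* low byte first (little-endian) */
    p[1] = (uint8_t)(v >> 8);
    return 2;
}

static size_t put_f32(uint8_t *p, float f)
{
    uint32_t bits;
    memcpy(&bits, &f, sizeof bits); /* assumes IEEE-754 binary32 */
    for (int i = 0; i < 4; ++i)
        p[i] = (uint8_t)(bits >> (8 * i)); /* little-endian byte order */
    return 4;
}

/* Encodes spec into buf (at least 32 bytes); returns the encoded size,
   or 0 if the spec has too many layers. */
size_t spec_encode(const model_spec *s, uint8_t *buf)
{
    size_t n = 0;
    if (s->n_layers > 8)
        return 0;
    buf[n++] = 'M'; /* hypothetical magic byte */
    buf[n++] = 1;   /* hypothetical format version */
    buf[n++] = s->n_layers;
    for (uint8_t i = 0; i < s->n_layers; ++i)
        buf[n++] = s->layer_size[i];
    buf[n++] = s->activation;
    n += put_f32(buf + n, s->input_scale);
    n += put_f32(buf + n, s->learning_rate);
    n += put_u16(buf + n, s->batch_size);
    return n;
}
```

A 2-8-1 network encoded this way takes 17 bytes: 3 header bytes, 3 layer sizes, 1 activation byte, two 4-byte floats, and a 2-byte batch size.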

There are a couple of interesting details to point out about the way we handle model specifications using our Crystal binary format (details that also apply to the separate model binary format we use for pure Edge models). First of all, since we pass around models as simple packets of binary data, they’re completely decoupled from the specific platform they run on. This means that if I decided to upgrade my Commodore 64 to, say, an Amiga 500, I could move my neural network with me, keeping everything my neural network model has learned across hardware generations. Similarly, if I decided that my neural network model needs to be more powerful, or less resource demanding, I could simply side-load a new model binary (e.g., through an over-the-air update) without having to recompile any parts of my application. I think that is pretty neat!
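Side-loading a new model without recompiling boils down to reading the model bytes at run time instead of compiling them into the program. The sketch below is a hypothetical loader, not the Ekkono API: it reads a blob from a file, checks a made-up header (magic byte `'M'`, format version 1), and only replaces the active model if the new blob passes validation, so a corrupt or incompatible update leaves the running model untouched. Whether `fopen`/`fread` reach a disk drive, an SD card, or something else depends on the platform’s C library.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

#define BLOB_MAX 256 /* hypothetical upper bound on model size */

typedef struct {
    uint8_t data[BLOB_MAX];
    size_t  len;
} model_blob;

/* Hypothetical header check: byte 0 = 'M', byte 1 = format version 1. */
int blob_is_valid(const uint8_t *data, size_t len)
{
    return len >= 3 && data[0] == 'M' && data[1] == 1;
}

/* Reads a model blob from `path` at run time. The active model is only
   replaced if the file exists, fits in BLOB_MAX bytes, and validates.
   Returns 1 on success, 0 otherwise. */
int blob_reload(model_blob *active, const char *path)
{
    model_blob fresh;
    FILE *f = fopen(path, "rb");
    if (f == NULL)
        return 0;
    fresh.len = fread(fresh.data, 1, BLOB_MAX, f);
    int too_big = (fgetc(f) != EOF); /* more data left than fits */
    fclose(f);
    if (too_big || !blob_is_valid(fresh.data, fresh.len))
        return 0;
    *active = fresh;
    return 1;
}
```

The rest of the application only ever sees `active`, so swapping in a larger or smaller network is a data update rather than a rebuild.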

It’s Alive

So, armed with our incremental neural network binary, a compiler that can produce a C64-compatible application with the Crystal library, an SD-card reader that connects to the Commodore 64, and a whole slew of cables and adapters (in a true Frankensteinian fashion), we are finally ready to get our application running on the real thing. Lo and behold, it is alive and kicking! Here we’re feeding training examples to the neural network, one at a time, consisting of two pseudo-randomly generated numbers as the input, and their sum as the output. Between generating input data, training the neural network, and printing results to the screen, the C64 is able to process a little over one training example per second.

Before we get to the pièce de résistance – a short video showing the demo application in action – I would like to put forward one final digression. Aside from the mess of cables and connectors (including four separate pieces of hardware just to capture the Commodore 64’s video output), the steps we took to get Ekkono Crystal running on the C64 are the same as we would take to develop smart functionality using machine learning on any embedded platform, including the microcontrollers shown in the first picture of this post. Of course, when integrating smart functionality on modern microcontrollers connected to sensors monitoring physical equipment, the number of interesting, functional, and valuable types of applications we can develop increases by many orders of magnitude. Using a neural network to perform simple addition might not seem particularly practical, but when paired with appropriate sensing equipment, the Ekkono machine learning libraries help you kick-start the process of building embedded applications for condition monitoring, system control, smart warning systems, and more. Whether you choose to run those applications on a Commodore 64, or something more recent, is entirely up to you.

So, if you ever find yourself wondering whether you can run professionally developed machine learning software on your embedded platform, the answer is probably yes – as long as you have a C99-compliant compiler, and your hardware is at least as powerful as a 40-year-old computer, Ekkono’s got your back.